EDIT: Sorry, mods, but please move this to a more appropriate section. I tried posting this about eight times and hit various glitches: the overlay closing, links not posting, picture uploads rejecting my browser plugins… The overlay closing was the worst of it, so I'm glad I always make backups. Anyway, being slightly drunk, I apparently messed up the section selection on the last attempt, just when I finally managed to get the post through.

So, a homebrew game when you know virtually nothing about game development - that's gotta be fun! As a hardware engineer I can't really have too much fun with the hardware (industrial safety equipment is responsible for not blowing things up, so better not get too playful there), so this was definitely a must-try for me. Who cares that we don't know a single engine? We know how to simulate and do DSP (even via GPGPU)! We aren't that good at art, yet we are used to CAD, CAM and SCADA. As expected, I came across some common techniques whose purpose I really don't understand, and the conclusions are likely worth sharing!

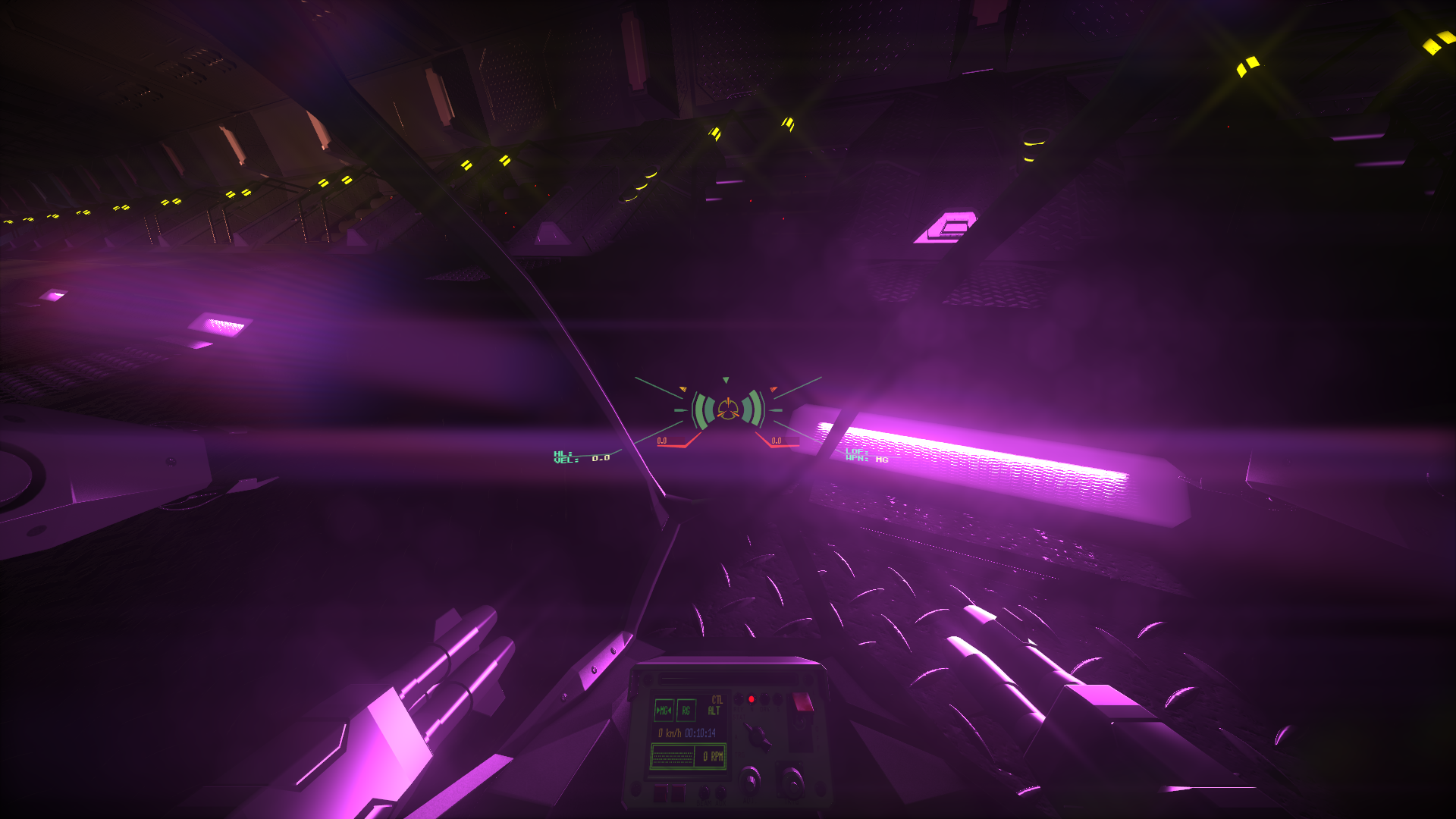

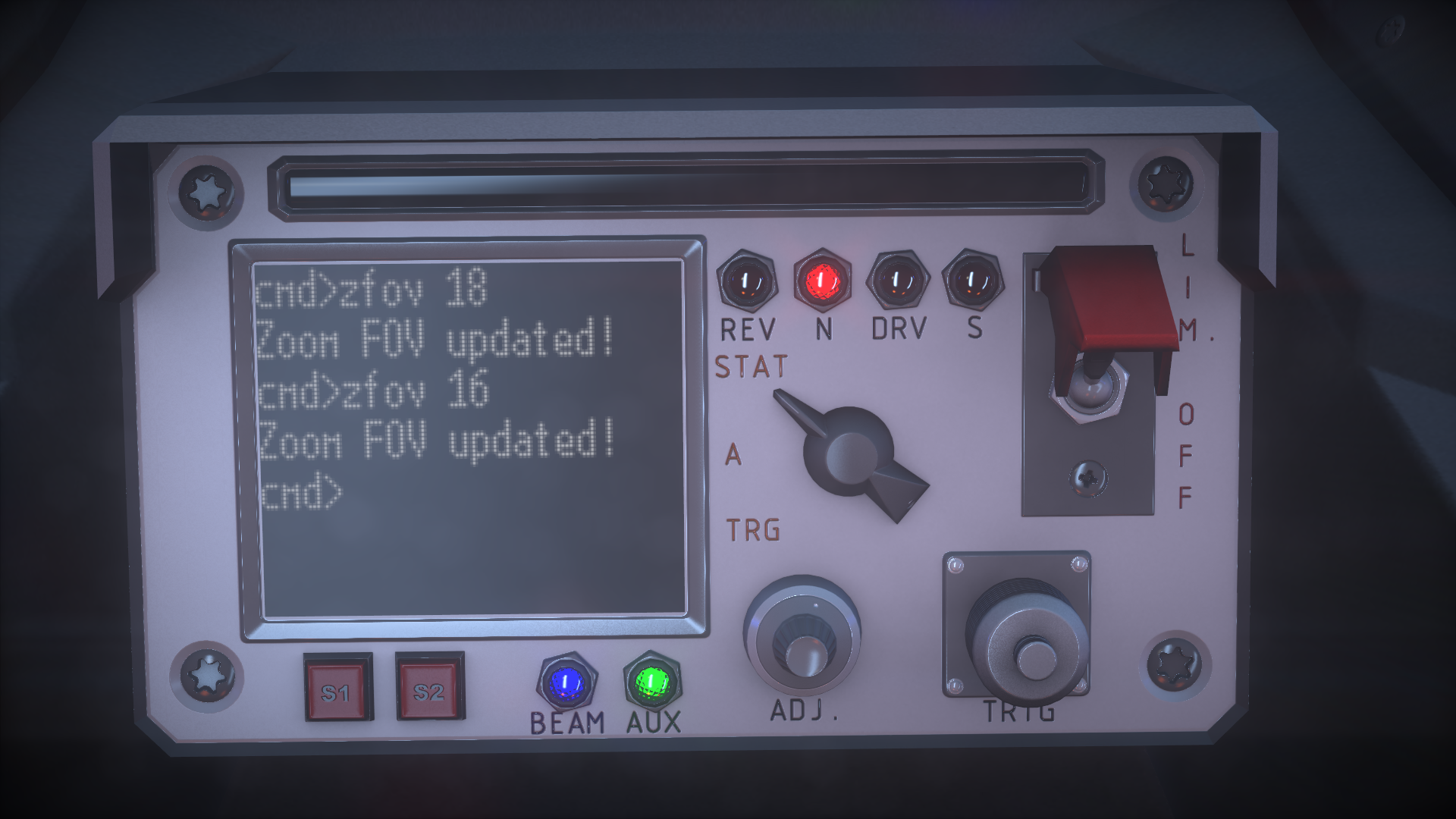

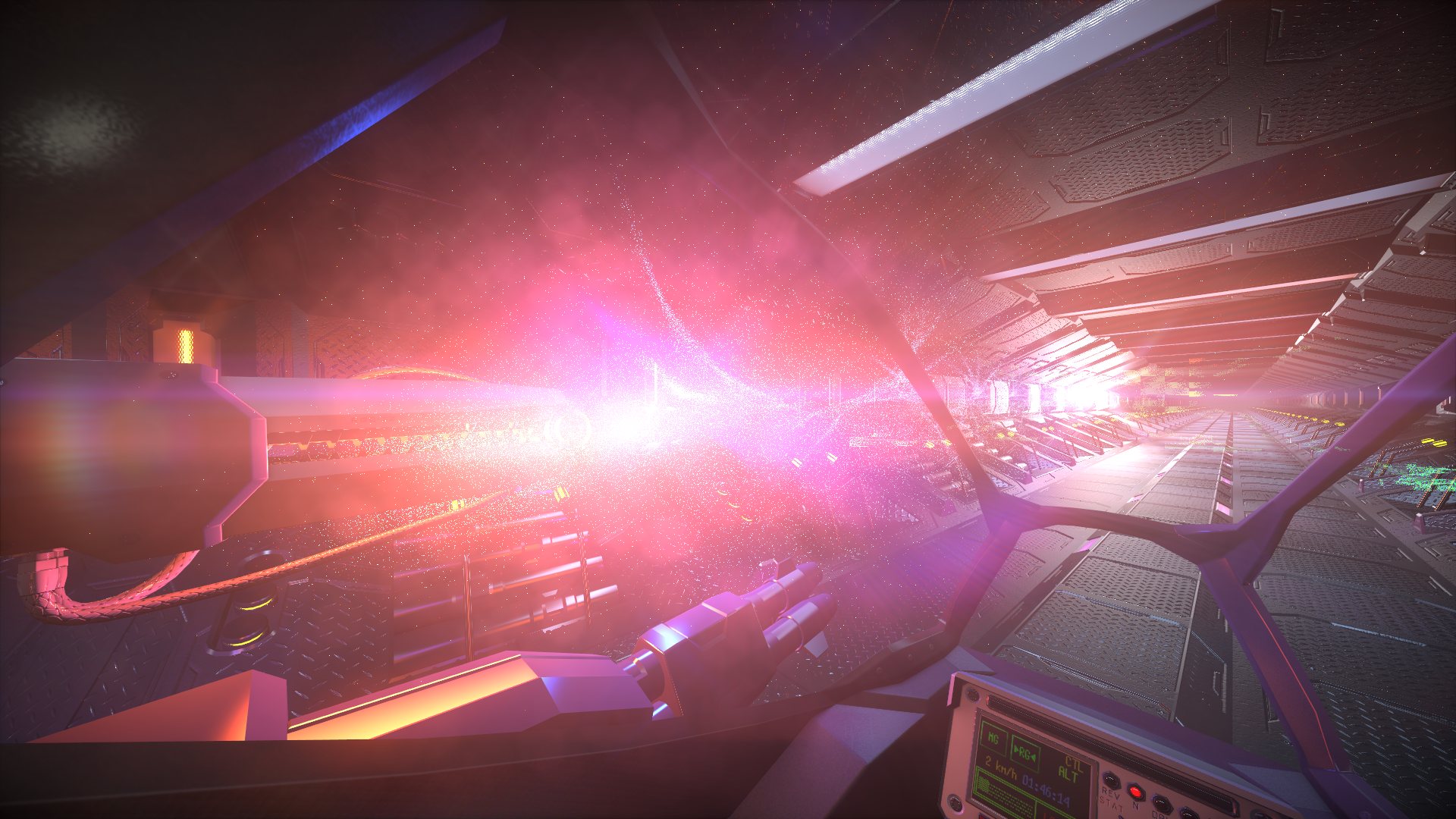

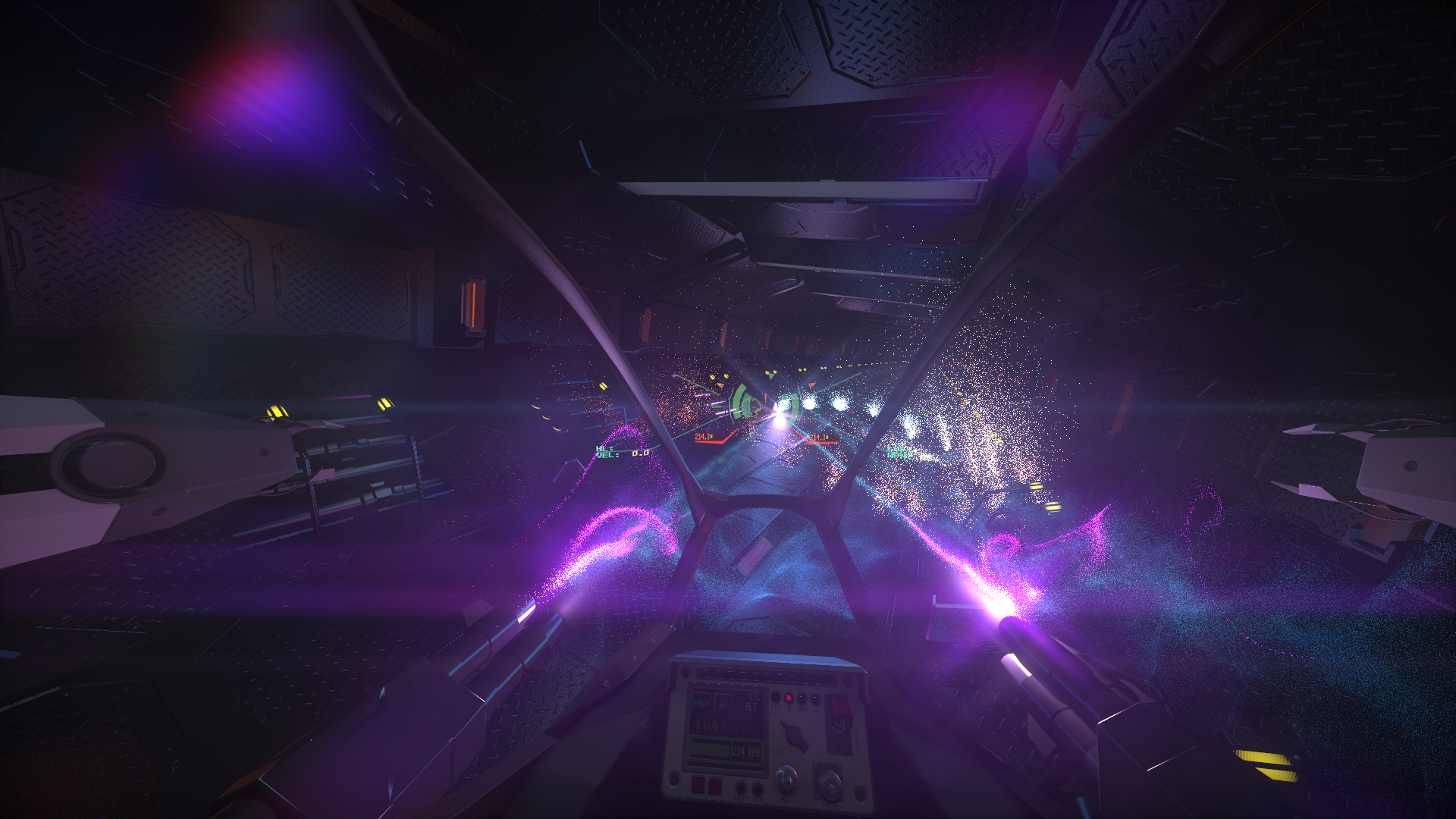

HDR and lens systems. I read some articles, and everyone mentions photogrammetry and log-averages when it comes to adaptation. No idea why. Your camera, your eyes, your welding visor - they all react to the incoming light power, which is basically your pre-HDR linear pixel color value! Two pixels carry twice the light of one pixel, so a plain linear average is what we need, while the log-average is best left for film scans.

As for the lens, I noticed many projects still use the concept of a "bright-pass", while many lens effects still rely on sprites and textures. Why? We have a lens system that is supposed to focus the rays on the receiver, which physically converts angles to coordinates. Some fixed fraction of the light lands at the wrong point due to secondary reflections and the like, so this is just begging for a cascade filter with some constant gain! Also, the pyramidal filter seems to be forgotten nowadays… OK, let's try: obtain a non-clamped, linear-coded frame, create a low-resolution copy, and premultiply it by the leakage gain. The screen dust is just a noise generator (which can also simulate randomized rays by randomizing the lookup coordinates) - and do not forget the separable bokeh filter to de-focus the dust and the ghosts. The results are in the screenshots.
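To make the two ideas above concrete, here is a minimal CPU-side sketch in plain Python rather than shader code. It compares a linear average with a log-average on a mostly dark frame, then adds a cascade of low-resolution copies scaled by a constant gain. The function names, the 2x2 box downsample, the nearest-neighbour upsample and the gain of 0.05 are all assumptions made for illustration, not the demo's actual code.

```python
import math

def linear_average(lum):
    """Arithmetic mean of linear luminance: proportional to total incoming power."""
    return sum(lum) / len(lum)

def log_average(lum, eps=1e-4):
    """Geometric (log) mean, as often seen in tone-mapping articles."""
    return math.exp(sum(math.log(eps + v) for v in lum) / len(lum))

def downsample2(img):
    """Halve a 2D image with a 2x2 box filter (even dimensions assumed)."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]

def upsample2(img):
    """Nearest-neighbour 2x upsample (a shader would more likely sample bilinearly)."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def lens_leak(img, gain=0.05, levels=2):
    """Add `levels` progressively blurrier copies, each scaled by a constant gain.

    Assumes both image dimensions are divisible by 2**levels.
    """
    result = [row[:] for row in img]
    level = img
    for i in range(levels):
        level = downsample2(level)
        glow = level
        for _ in range(i + 1):          # bring the low-res copy back to full size
            glow = upsample2(glow)
        for y, row in enumerate(glow):
            for x, v in enumerate(row):
                result[y][x] += gain * v
    return result

# One very bright pixel in an otherwise black, non-clamped linear frame.
frame = [[0.0] * 4 for _ in range(4)]
frame[0][0] = 1600.0
flat = [v for row in frame for v in row]

print("linear average:", linear_average(flat))
print("log average:   ", log_average(flat))
print("leak at far corner:", lens_leak(frame)[3][3])
```

The linear average follows the total light in the frame, whereas the log-average is dominated by the many dark pixels. Because no bright-pass threshold is involved, the same constant gain applies to every pixel, and only the unclamped highlights end up producing visible glare.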

Why go deferred? In a forward rendering path, you can just assign every draw call the desired set of lights, getting MSAA and depth control as a bonus. SSR and SSAO are worth a short deferred path though (the first one, and hopefully both, should soon go away as raytracing becomes commonplace).
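One simple way to assign a light set per draw call is a bounding-sphere overlap test followed by picking the nearest few lights. The sketch below is only an illustration of that idea: the `Light` and `DrawCall` types, the `lights_for` helper and the limit of four lights are hypothetical, not taken from the demo.

```python
import math
from dataclasses import dataclass

@dataclass
class Light:
    name: str
    pos: tuple      # world-space position (x, y, z)
    radius: float   # distance beyond which the light is treated as zero

@dataclass
class DrawCall:
    name: str
    center: tuple   # bounding-sphere centre of the mesh
    radius: float   # bounding-sphere radius

def lights_for(draw, lights, max_lights=4):
    """Return names of the nearest lights whose range overlaps the draw call."""
    hits = []
    for light in lights:
        d = math.dist(draw.center, light.pos)
        if d <= draw.radius + light.radius:   # sphere-sphere overlap
            hits.append((d, light.name))
    hits.sort()                               # nearest first
    return [name for _, name in hits[:max_lights]]

lights = [
    Light("lamp", (0.0, 0.0, 0.0), 5.0),
    Light("far_lamp", (100.0, 0.0, 0.0), 5.0),
]
crate = DrawCall("crate", (3.0, 0.0, 0.0), 1.0)
print(lights_for(crate, lights))
```

The resulting list would then be uploaded as per-draw uniforms, so the forward shader only loops over the lights that can actually reach that mesh.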

Shadows. What we usually get is PCS along with a bias and inside-out rendering (which can cause light leaking). Why? A combined Gauss-bilinear filter and a depth estimation algorithm do the job (and there is no need for inside-out rendering anymore!)… And the bias can be lowered to just enough to keep the comparisons strict.

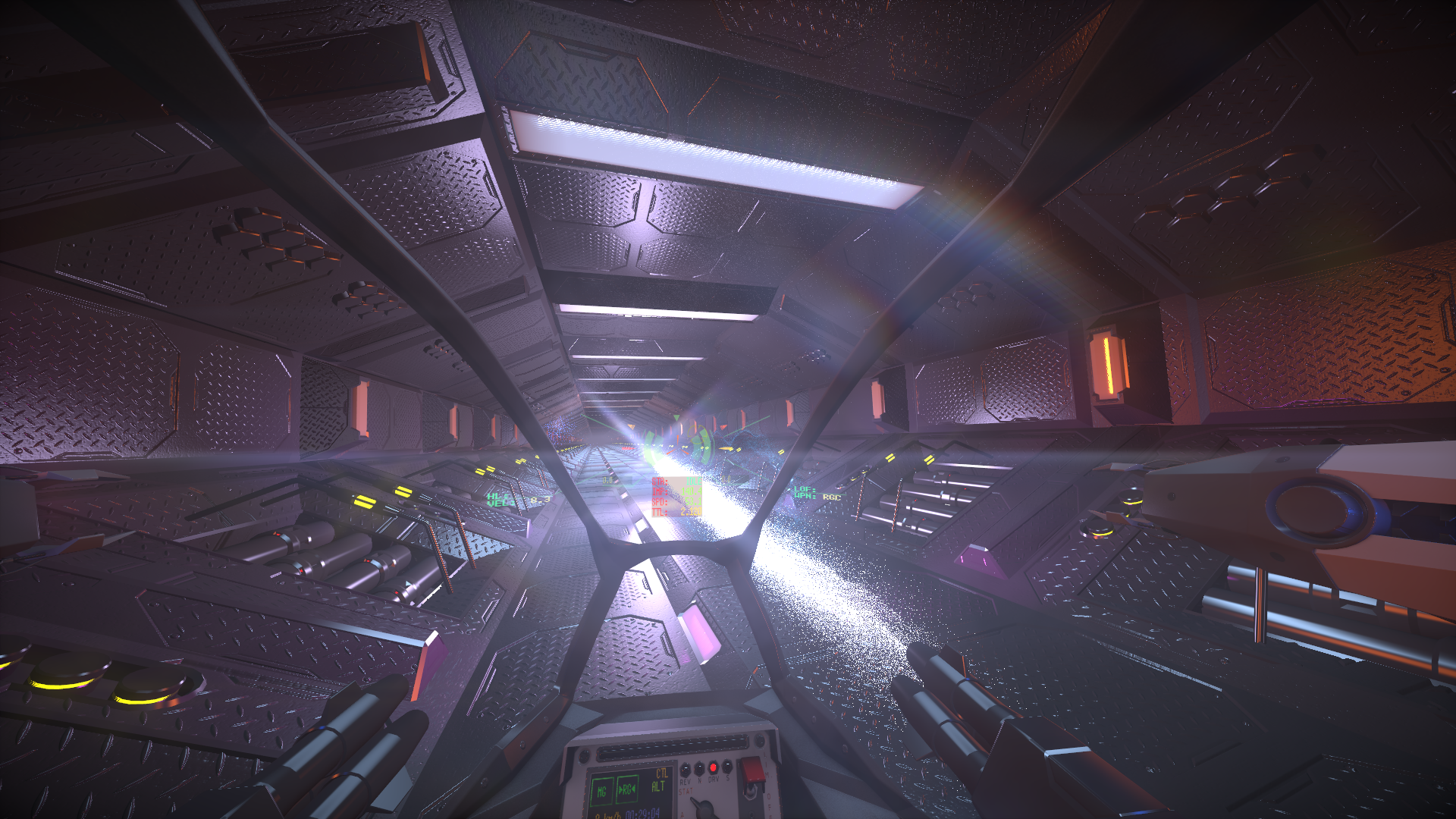

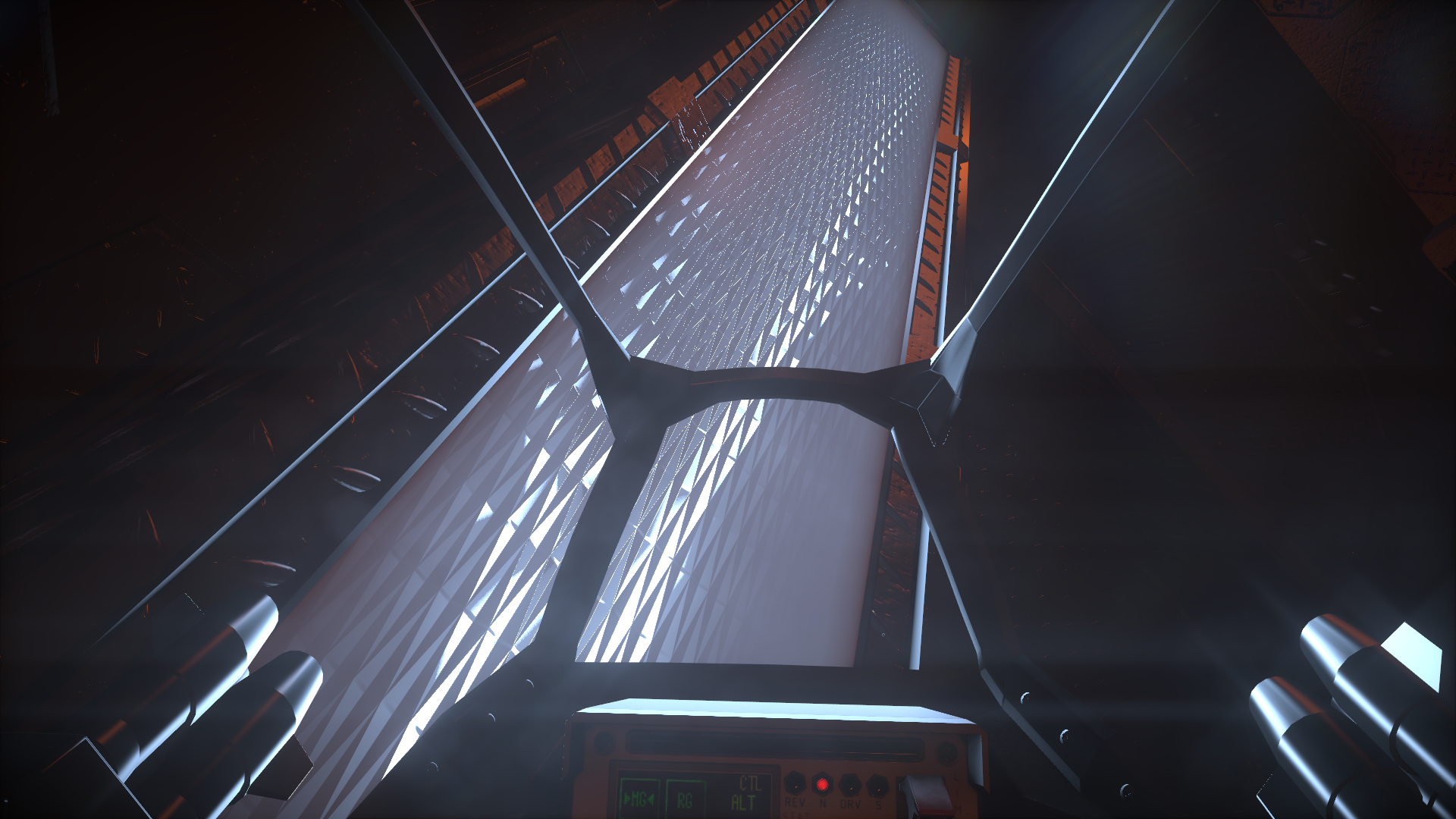

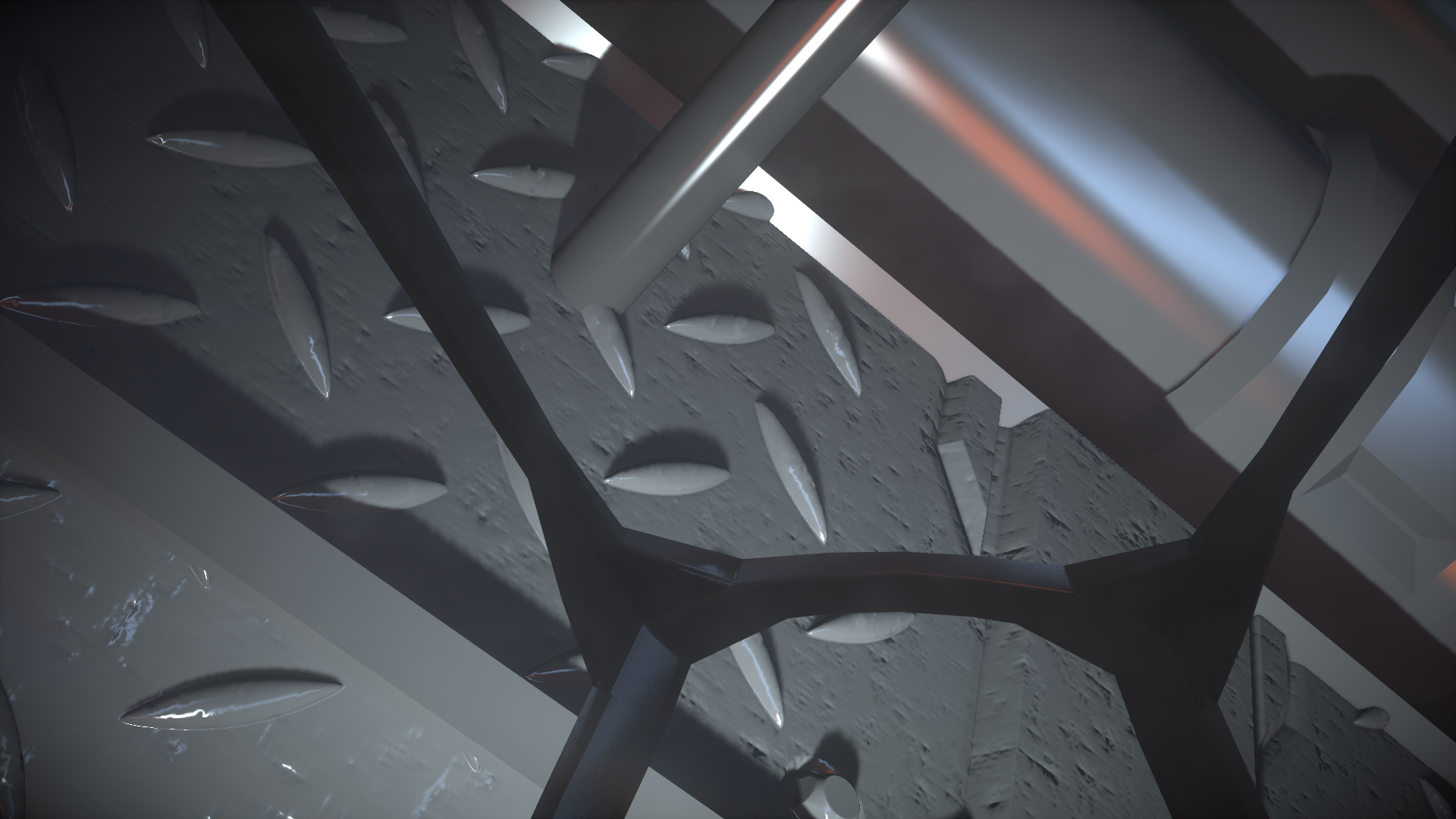

Tessellation, POM etc. Why, when you can raymarch? As a bonus, you can raymarch through light covers and trace the final ray against a sphere or something similar to achieve a neat lightbulb simulation! Tessellation is somewhat slow - hey, even a GS by itself increases rendering times! POM is prone to layering artifacts, yet careful raymarching combines accuracy and speed. And even more of a bonus: with forward rendering you can have the raymarched faces clip any geometry (including each other) and cast and receive correct shadows without any tricks!
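As a rough illustration of the raymarching mentioned above, the sketch below marches a ray against a height field with fixed steps and then refines the hit by bisection, which is one common way to avoid the layering seen with coarse stepping. The function name, the step counts and the `height(x, y)` callback are assumptions for this example; a real implementation would run per pixel in a fragment shader and sample a height texture.

```python
import math

def raymarch_height(height, origin, direction, max_dist=4.0, steps=64, refine=16):
    """Return the distance along the ray to the first hit with z <= height(x, y).

    Returns None if nothing is hit within max_dist.
    """
    def below(t):
        x = origin[0] + direction[0] * t
        y = origin[1] + direction[1] * t
        z = origin[2] + direction[2] * t
        return z <= height(x, y)

    if below(0.0):
        return 0.0
    dt = max_dist / steps
    prev = 0.0
    for i in range(1, steps + 1):
        t = i * dt
        if below(t):
            lo, hi = prev, t            # the surface lies between these two samples
            for _ in range(refine):     # bisection sharpens the coarse hit
                mid = 0.5 * (lo + hi)
                if below(mid):
                    hi = mid
                else:
                    lo = mid
            return hi
        prev = t
    return None

def bumps(x, y):
    """Hypothetical procedural height field with values in [0, 0.5]."""
    return 0.25 + 0.25 * math.sin(3.0 * x) * math.cos(3.0 * y)

s = 1.0 / math.sqrt(2.0)
print(raymarch_height(bumps, (0.0, 0.0, 1.0), (s, 0.0, -s)))
```

Because the hit distance is continuous rather than quantized to layers, it can also be turned into a proper depth value, which is what allows raymarched surfaces to clip other geometry and take part in shadowing.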

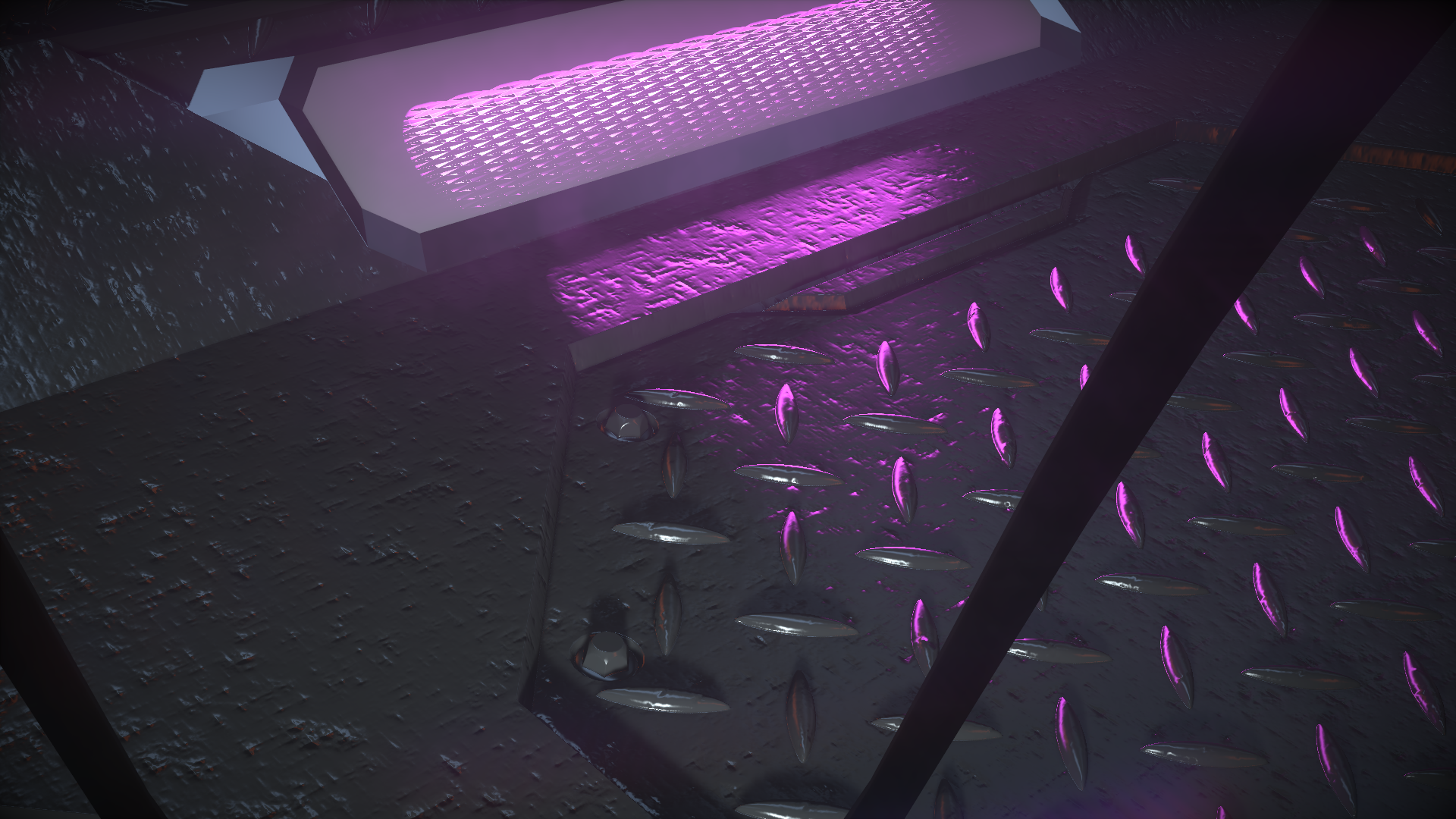

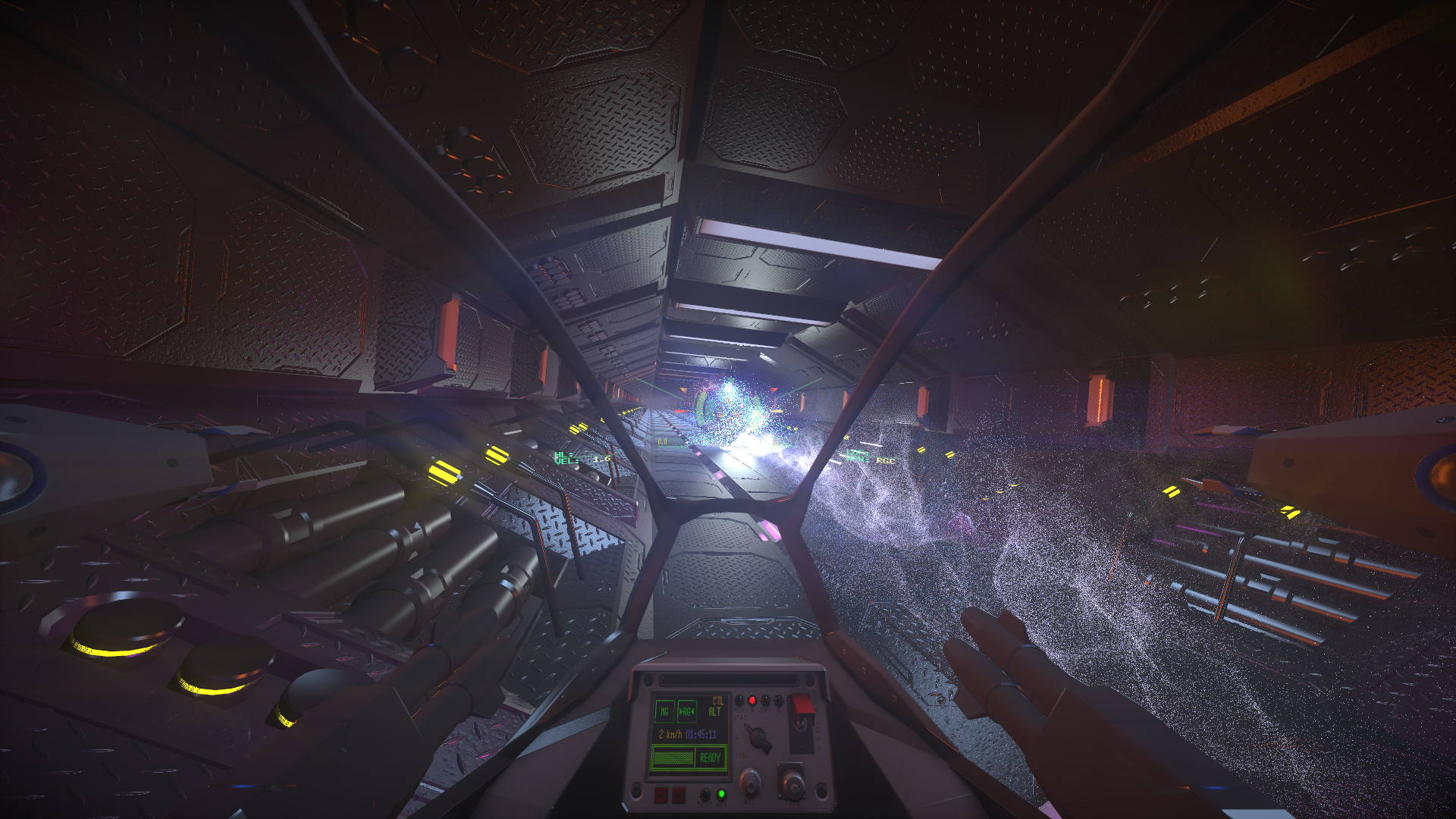

Particles. Quake II had them. Nothing has them now - not in the expected quantities, that is. Let's try to use them, they look fun!

No “old-school” normal mapping, only TBN maps. OK, TBN first. For models, TBN and model-space maps have virtually the same computational cost. Model-space maps are noisier, but on average they are also much more accurate when it comes to sharper reflections. For “old-school” texturing, that is, painting surfaces with tilted and non-uniformly scaled maps, let's just use a non-orthogonal, scaled TBN vector set. This works for the raymarched normal/offset pairs too, of course.
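One possible reading of the "non-orthogonal scaled TBN vector set" is to leave the tangent and bitangent exactly as the texture mapping defines them (tilted, differently scaled) and only normalize the final result. The sketch below assumes that interpretation; the helper names are made up for illustration and the vectors are arbitrary sample values.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def tbn_to_surface(n_ts, T, B, N):
    """Map a tangent-space normal into the space T, B and N are expressed in.

    T and B are deliberately NOT orthonormalized: they follow the U and V
    directions of a tilted, non-uniformly scaled texture mapping.
    """
    v = tuple(n_ts[0] * T[i] + n_ts[1] * B[i] + n_ts[2] * N[i] for i in range(3))
    return normalize(v)

T = (2.0, 0.0, 0.0)   # U axis stretched by 2
B = (0.5, 1.0, 0.0)   # V axis sheared towards U
N = (0.0, 0.0, 1.0)   # geometric surface normal

print(tbn_to_surface((0.0, 0.0, 1.0), T, B, N))   # flat texel
print(tbn_to_surface((0.0, 0.6, 0.8), T, B, N))   # texel tilted along V
```

A flat texel still maps to the geometric normal, while a tilted texel leans along the sheared V direction instead of an idealized perpendicular one.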

If you're curious, you can find a free tech demo on my Patreon account: https://www.patreon.com/projectconstraint (I want to raise some money to also get a few VR headsets, so this can finally run in proper VR rather than the current cross-eyed mode, which I highly recommend trying, by the way - just don't forget to adjust the settings, as it needs the correct screen size and other distances to be set in order to work properly).